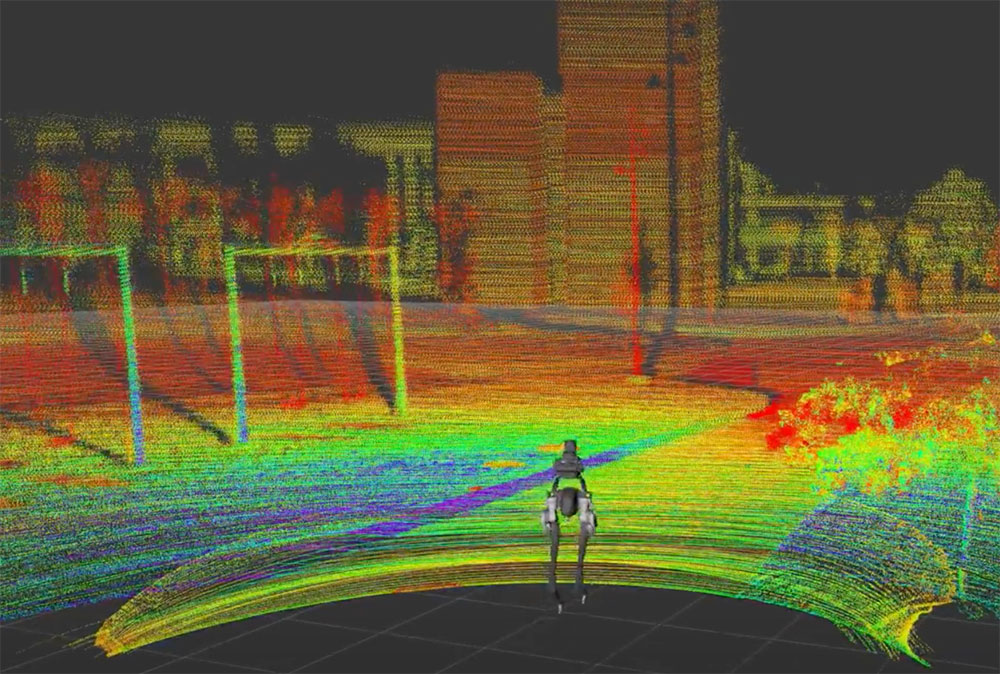

Sensing with LIDAR

Wave hello! Robots use sensors, like the LIDAR above this TV, to see the world around them. LIDAR works a bit like echolocation, which bats use to find their way in the dark: they emit sounds and listen for the echoes bouncing off objects to figure out what’s around them.

LIDAR, which stands for Light Detection and Ranging, emits laser light instead of sound. This Velodyne system sends out 16 laser beams and measures the time it takes for the light to return to figure out how far away things are. LIDAR sensors are used on all sorts of robots. A self-driving car, for example, uses one to create a 3D map of its surroundings, which helps it know where other cars, people, and objects are so it can make the right decisions and drive safely.

Wave hello, you’re on TV! You’re looking at one way that a robot sees its environment.

Before robots reason, act, and interact, they have to sense what’s around them. Sensing requires sensors, like the LIDAR above this TV.

LIDAR works a bit like echolocation, which bats use to find their way in the dark. They emit sounds and listen for the echoes bouncing off objects to figure out what's around them.

Instead of yelling in high frequency, LIDAR, which stands for Light Detection and Ranging, shoots out lasers. This Velodyne system sends out 16 laser beams and measures the time it takes for the light to return to figure out how far away things are. LIDAR sensors are used on all sorts of robots. A self-driving car, for example, uses one to create a 3D map of its surroundings, which helps it know where other cars, people, and objects are so it can make the right decisions and drive safely.
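For the curious, here is a minimal sketch of the timing idea described above. It is not the sensor's actual software. It only assumes that the light travels out to an object and back at the speed of light, so the distance is half of the round trip. The function name and the sample times are made up for illustration.

```python
# Speed of light in a vacuum, in meters per second (air is very close to this).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time.

    Hypothetical helper for illustration: the light goes out and comes back,
    so the object is half the total path length away.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Made-up round-trip times, in nanoseconds, for a few laser pulses.
    sample_times_ns = [20.0, 66.7, 133.4]
    for t_ns in sample_times_ns:
        meters = distance_from_round_trip(t_ns * 1e-9)
        print(f"{t_ns:6.1f} ns round trip -> about {meters:5.2f} m away")
```

Light is fast: a pulse that comes back after just 20 billionths of a second has bounced off something about 3 meters away, which is why the sensor needs very precise timing.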

Related

- YouTube: Cassie autonomously navigates around objects

- Research: Depth Estimation from LiDAR and Stereo